Will AI Replace QA Engineers?

Will AI replace QA testers? Yes, and it's already doing so. Here's why it's such a tempting option for organizations, and what the future holds.

TL;DR: AI will eventually replace QA engineers. In fact, it is already doing so.

But is AI really the root cause of the decline of distinct QA roles, or has it simply accelerated the move towards a more integrated approach to testing?

We'd suggest the latter. Organizations have been moving away from 'over-the-wall' QA for a decade now: it's clunky, expensive, and can't keep up with the pace of deployment that today's digital landscape demands.

So AI will replace the last few QA teams left standing, but that might well have been a done deal anyway. Its effect on engineering-led testing practices and workloads is arguably just as significant. We take a deeper dive into both in the article below.

AI Will Replace QA Engineers Because Traditional QA is Already Dying

With AI testing solutions now widely available and dedicated QA roles decreasing rapidly, it's tempting to see AI as the root cause of this decline.

In fact, organizations have been finding alternatives to separating QA and engineering for the best part of 10 years. Both Yahoo and Microsoft moved away from over-the-wall QA teams back in 2015 - and the rest of Big Tech has done similarly.

Separating QA roles from development isn't an ideal way for tech businesses to test in 2025. Here's why:

- It's expensive: a QA team of 10 could easily cost $1m per year. Add in infrastructure, training, and management costs, and QA can eat up 25% of your budget or more.

- It's slow: the highest-performing engineering teams deploy code around 200x more frequently than the lowest performers. External QA gets in the way of this by creating bottlenecks.

- It creates disconnected organizations - handoff models of QA encourage knowledge silos, in which QA teams lack a deeper understanding of the codebase, and engineers don't fully understand an organization's testing methodologies. Engineers write code that's difficult to test, and testing takes longer as a result.

- It delays releases - identifying bugs late in the development cycle makes them more expensive and more difficult to fix, causing knock-on delays in your release schedule.

Businesses can address the first problem, cost, by outsourcing to a separate QA organization. As in other business areas, offshoring will cut costs, but it won't solve the fact that separate QA roles build silos and slow down releases in a competitive digital environment. In fact, by adding an entirely separate organization into the mix, outsourcing can often make these problems worse.

So what can engineering teams do instead?

Separate QA testing roles aren't dead, exactly, but they are dying. So where are we now, and how will AI testing tools help?

Increasingly, organizations are integrating testing and development, so that engineers own the testing process alongside the code they create. Engineers think about testability from the outset, so that testing can run alongside development to catch issues when they're cheaper and easier to fix - an increasingly popular methodology known as 'shift left' testing.

Here's how that works:

- Engineers consider testability while breaking down user stories

- Engineers plan code structure with testing in mind, ensuring modular and easily testable components

- Engineers write code in small, testable units

- Engineers create and run unit tests for each component as it's built

- Engineers integrate new code with the existing codebase, running integration tests

- Peers review both code and tests, ensuring comprehensive coverage

- Automated tests run with each commit, catching issues early
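To make the steps above concrete, here is a minimal sketch in Python. It is a hypothetical illustration, not a prescribed workflow: `apply_discount` and its rules are invented for the example. The point is the shape: a small, side-effect-free unit written with testing in mind, with unit tests written right alongside it.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents.

    Kept small and free of side effects so it can be unit tested in isolation.
    """
    if price_cents < 0:
        raise ValueError("price_cents must be non-negative")
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTests(unittest.TestCase):
    # Unit tests written alongside the function, as in shift-left testing.

    def test_no_discount_returns_original_price(self):
        self.assertEqual(apply_discount(1999, 0), 1999)

    def test_partial_discount_rounds_down(self):
        # 1999 * 90 // 100 == 1799 (1799.1 rounded down)
        self.assertEqual(apply_discount(1999, 10), 1799)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(1999, 100), 0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 150)
```

Saved under a hypothetical name such as `test_discount.py`, the tests run with `python -m unittest test_discount` or with `pytest`. Wiring that command into your CI provider so it runs on every commit covers the last step in the list; the exact configuration depends on the provider.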

The pros of engineering-led testing

- You eliminate technical debt created by engineers writing code that's difficult to test

- You eliminate knowledge silos across your organization

- You save money on hiring separate QA roles

- Your engineers can use shift-left methodology to find and fix bugs earlier, reducing costs and release delays

- You can integrate testing with CI/CD processes to speed up release cycles

The cons of engineering-led testing

- You shift more responsibility onto your engineers, so they may spend more time running tests than building new features

That single con is a major one, and perhaps the reason the shift to engineering-led testing hasn't been all-encompassing yet.

Test automation tools aren't new; engineers have been using them for decades. But writing test scripts still takes time, as does building test environments and maintaining scripts. On top of that, traditional test automation has always been tricky for larger, more complex tests such as end-to-end tests.

This has left organizations with a choice: save money, eliminate silos, and set a course for total CI/CD integration, or free up engineers to spend more time building new features by handing testing off to a separate QA team.

For smaller organizations with limited engineering resources (hey, we can't all be Google), outsourcing QA is still a tempting option. Perhaps that's why QA-as-a-Service (QAaaS) models, which combine access to AI tools with a pool of human testers, have grown in popularity over the past couple of years.

Why AI is the Answer

With AI testing tools, you don't have to make that choice. You can integrate testing and development and automate your most complex tests, whilst giving your engineers time back for valuable project work. Unlike traditional automation, AI testing tools require minimal human input.

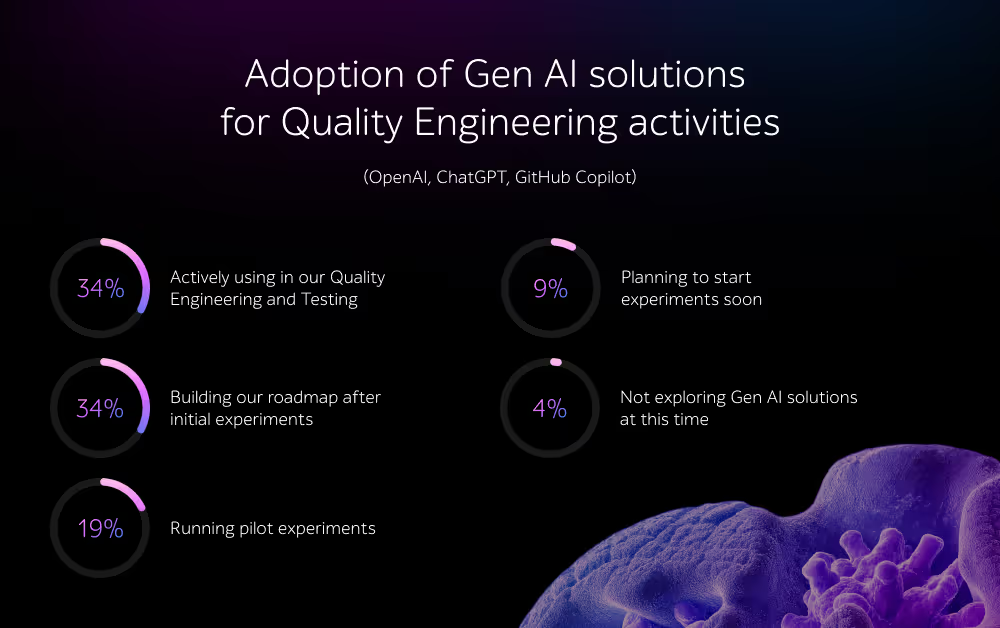

Organizations understand this: according to the 2024/25 World Quality Report, nearly two thirds of organizations (64%) are either actively using AI for QA or building an implementation roadmap. Meanwhile, just 4% of organizations have no plans to explore AI testing solutions.

Source: https://www.linkedin.com/pulse/navigating-qa-trends-2025-a1qa-e3atf/

What do AI Testing Tools Do, and Why Are They the Answer?

Let's take a quick look at what today's AI testing tools offer:

Codeless test script creation

Why write code when you could simply describe what you want your tests to do in plain English and let AI take care of the rest? No, it's not a pipe dream: Momentic and other AI tools already use natural language processing to make this possible, saving hours of work on test script creation.

Self-healing tests

Say goodbye to tedious hours of test maintenance with self-healing tests that automatically update when changes are made to your application.
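Vendors don't generally publish exactly how their self-healing works, so the sketch below is an assumption, not Momentic's implementation. It shows one common idea in simplified Python: record several attributes of an element when the test is written, and if the primary locator (here an `id`) stops matching, fall back to the element that still matches the most recorded attributes, then update the stored locator. The page model (a list of attribute dicts) and the `SelfHealingLocator` class are hypothetical; a real tool would work against a live DOM.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical, simplified page model: each element is a dict of attributes.
Element = dict[str, str]


@dataclass
class SelfHealingLocator:
    """Locates an element using attributes recorded when the test was authored."""

    recorded: Element          # attributes captured when the test was written
    primary: str = "id"        # attribute tried first (the "normal" locator)
    min_score: int = 2         # matching attributes required before healing
    healed_from: Optional[str] = field(default=None, init=False)

    def _score(self, el: Element) -> int:
        # Count how many recorded attributes this element still matches.
        return sum(1 for k, v in self.recorded.items() if el.get(k) == v)

    def find(self, page: list[Element]) -> Element:
        # 1. Fast path: exact match on the primary attribute.
        key = self.recorded.get(self.primary)
        for el in page:
            if key is not None and el.get(self.primary) == key:
                return el

        # 2. Healing path: pick the element matching the most recorded attributes.
        best = max(page, key=self._score, default=None)
        if best is None or self._score(best) < self.min_score:
            raise LookupError("no confident match; flag the test for human review")

        # 3. Update the stored attributes so future runs hit the fast path.
        self.healed_from = key
        self.recorded = {k: best.get(k, v) for k, v in self.recorded.items()}
        return best
```

If a developer renames a button's `id` from `submit-btn` to `checkout-submit` but leaves its text, role, and class alone, `find` still returns the button and stores the new `id`. The minimum score is the important design choice: without it, a locator could "heal" onto the wrong element and the test would pass for the wrong reason.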

Enhanced analytics

AI tools can predict where defects are likely to occur based on trends and patterns in previous code releases. This helps engineers prioritize their time and take appropriate precautions when working on particularly high-risk areas, and it reduces the chances of last-minute defects derailing launches.
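Commercial tools don't document their prediction models in detail, so the following is a deliberately simple stand-in rather than how any particular product works: a recency-weighted count of bug-fix commits per file, often called a "hotspot" heuristic. The commit tuple format and the function names are hypothetical; in practice you would pull this history from version control and decide which commits count as bug fixes (for example, by linked issue type).

```python
import math
from collections import defaultdict

# Hypothetical commit history entry: (timestamp, is_bug_fix, files_touched).
Commit = tuple[float, bool, list[str]]


def hotspot_scores(commits: list[Commit]) -> dict[str, float]:
    """Score files by how often, and how recently, they needed bug fixes.

    Timestamps are normalized to [0, 1] (oldest -> 0, newest -> 1), and each
    bug-fix commit adds a sigmoid weight, so recent fixes count far more
    than old ones.
    """
    if not commits:
        return {}
    times = [t for t, _, _ in commits]
    oldest, newest = min(times), max(times)
    span = (newest - oldest) or 1.0  # avoid dividing by zero for one timestamp

    scores: dict[str, float] = defaultdict(float)
    for t, is_bug_fix, files in commits:
        if not is_bug_fix:
            continue  # only bug-fix commits signal defect-prone code
        norm_t = (t - oldest) / span
        weight = 1 / (1 + math.exp(-12 * norm_t + 12))
        for path in files:
            scores[path] += weight
    return dict(scores)


def riskiest(commits: list[Commit], top_n: int = 3) -> list[str]:
    """Return up to top_n files ranked by hotspot score, highest first."""
    scores = hotspot_scores(commits)
    return sorted(scores, key=scores.get, reverse=True)[:top_n]
```

Because of the sigmoid weighting, a file that needed two fixes in the latest releases outranks one that was fixed long ago, which matches the intuition that recent trouble is the better guide to where engineers should look first.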

Real-time test visibility

Watch AI run your tests in real time to see exactly how a user would move through your app, with automatic screenshots and recordings for reference.

Workflow integration

Integrate your testing tool with your chosen CI/CD provider and your day-to-day workplace tools (Jira, Slack, and more) to speed up response times and reduce time to market.
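As one example of workflow integration, a CI step can post a test summary to Slack. This sketch assumes your test runner writes a JUnit-style XML report (pytest does this with `--junitxml=report.xml`) and that you've created a Slack incoming webhook, which accepts a JSON payload with a `text` field. The function names are illustrative assumptions, and many testing tools ship a native Slack or Jira integration that makes a script like this unnecessary.

```python
import json
import urllib.request
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict[str, int]:
    """Sum test counts from a JUnit-style XML report (e.g. pytest --junitxml)."""
    root = ET.fromstring(xml_text)
    # Reports may use a <testsuites> wrapper or a single <testsuite> root.
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
    totals = {"tests": 0, "failures": 0, "errors": 0, "skipped": 0}
    for suite in suites:
        for key in totals:
            totals[key] += int(suite.get(key, 0))
    return totals


def build_slack_message(totals: dict[str, int], build_url: str) -> dict[str, str]:
    """Build a Slack incoming-webhook payload summarizing the test run."""
    failed = totals["failures"] + totals["errors"]
    status = "passed" if failed == 0 else f"FAILED ({failed} failing)"
    text = (f"Test run {status}: {totals['tests']} tests, "
            f"{totals['skipped']} skipped. Details: {build_url}")
    return {"text": text}


def post_to_slack(payload: dict[str, str], webhook_url: str) -> None:
    """POST the JSON payload to a Slack incoming webhook URL."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        resp.read()


def notify(report_path: str, webhook_url: str, build_url: str) -> None:
    """Read a JUnit report from disk and post its summary to Slack."""
    with open(report_path, encoding="utf-8") as f:
        totals = summarize_junit(f.read())
    post_to_slack(build_slack_message(totals, build_url), webhook_url)
```

In a CI job you would call `notify("report.xml", webhook_url, build_url)` after the test step, reading the webhook URL from a secret your CI provider exposes (its name is up to you). Keeping parsing and message building separate from the network call means they can be unit tested without touching Slack.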

And with AI testing agents emerging as a viable technology, AI testing tools are about to become even smarter and easier to embed into your day-to-day workflows. Soon, AI agents will be able to intelligently infer usage patterns and edge cases, and perhaps even submit PRs to fix the bugs they encounter.

Why Are These Features So Attractive?

AI testing tools allow you to:

- Expand your test coverage significantly

- Run different types of tests, from quick unit tests to complex end-to-end tests

- Integrate directly with your CI/CD workflows

- Test as you write, for early identification of defects

- Minimize human error involved in repetitive QA processes

By implementing an AI testing solution, you extend the benefits of integrating testing and development (an even stronger codebase, even faster releases, and an even more responsive team) without adding to your engineers' workloads.

And given that these benefits will cost you a fraction of what a QA team (internal or outsourced) would, the efficiency gains will be too significant for organizations to ignore.

We've already seen the integration of QA testers within Agile teams, then the gradual merging of testing responsibilities into engineering roles. AI will remove the majority of testing workloads from humans entirely, and take the last of the traditional QA testing roles with it.

Final Words: Why AI Will Replace QA Engineers

If you think this makes it sound like we're moving towards a 'black box' approach to testing, we agree - and we think that that's a good thing.

If there are fast, reliable, and affordable testing tools available, there's no reason why developers need an intimate knowledge of the process itself.

As I mentioned in the black box testing blog:

Most developers never look at the internals of how a compiler does its magic. It's a tool we rely on to translate our code from a high-level language to something machine-readable; we only care whether it succeeds or fails.

Developers don't need to understand, maintain, and scrutinize their test suites. They shouldn't need to give test code the same attention as production code. The vision for testing is that you shouldn't have to micro-manage the intricacies of the testing process. Like compilation, testing should "just work": the coverage is comprehensive, the results are reliable, and if any issues are found, they're flagged or even automatically fixed.

Momentic: Your New AI Testing Tool?

"Momentic makes it 3x faster for our team to write and maintain end to end tests."

Alex Cui, CTO, GPTZero

We'd love to see if Momentic's AI testing tools could help you optimize your software testing life cycle.

If, like Alex and his team, you're keen to cut the time you spend on key testing processes by around two thirds, why not schedule a conversation with our founder?

FAQs

- Will AI replace QA engineers?

Yes. AI will eventually replace most traditional QA roles, and it’s already happening.

- Is AI the main reason QA roles are declining?

Not entirely. The bigger shift is teams moving away from “over-the-wall” QA toward integrated, engineering-led testing.

- Why is separate QA seen as a problem?

Because it’s expensive, slows releases, and creates silos between engineering and QA.

- What’s the alternative to traditional QA teams?

Engineering-led testing plus “shift-left” practices, where engineers own tests alongside the code and run automated tests with each commit.

- What do AI testing tools actually add?

Codeless test creation, self-healing tests, analytics, real-time visibility, and CI/CD integrations, which make complex automation easier with less human effort.