Software Testing Will Soon Be A Black Box, And That's Good For Sword-Fighting

Why software testing will soon be a black box, and why that's a good thing for developers.

Most developers never look at the internals of how a compiler does its magic. It's a tool we rely on to translate our code from a high-level language to something machine-readable; we only care if it succeeds or fails.

This should be the goal of testing. Developers shouldn't need to understand, maintain, and scrutinize their test suites. They shouldn't have to treat test code as a first-class citizen, giving it the same attention as production code. The vision for testing is that you shouldn't have to micro-manage or be intimately aware of the intricacies of the testing process. Like compilation, testing should "just work": the coverage is comprehensive, the results are reliable, and if any issues are found, they're flagged or even automatically fixed.

With the availability of generative AI agents, machine learning-based coverage, and techniques to analyze user behavior and code paths, we're at an inflection point where automated testing can be far more sophisticated. AI agents will be able to intelligently infer usage patterns and edge cases and perhaps even submit PRs to fix the bugs they encounter.

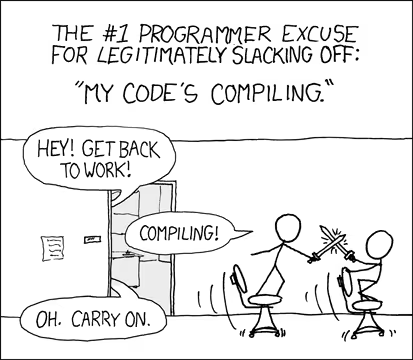

You'll press test and go and have a sword fight.

(source: xkcd 303)

What Testing Can Learn From Compilation

Today, compilation is automatic and reliable; developers focus on writing code, not on the minutiae of translation.

But it wasn't always so. In the early days of programming, software was written in assembly or machine code, with every detail handled manually. High-level languages and compilers existed, but they weren't trusted. They were janky, and early compilers often produced code that did not perform as well as hand-written assembly.

Developers would spend countless hours hand-optimizing their assembly code, arguing that automated compilation couldn't possibly understand the nuances of their specific use case. Sound familiar? It's the same argument we hear today about AI-driven testing not being able to understand the "special requirements" of our applications.

But compilers got better. A lot better. Not perfect, but to the point that if someone told you they were rolling their own compiler, you'd think they'd been rolling something else. This is because:

- Academic research and industry investment led to dramatic improvements in optimization techniques, making compiler-generated code often better than hand-written assembly.

- The development of robust intermediate representations and analysis frameworks allowed compilers to understand and optimize code at a deeper level than humans could manually track.

- The standardization of compilation techniques and the emergence of battle-tested compiler infrastructures (like LLVM) created a foundation of trust and reliability.

- Market forces drove the evolution. Companies couldn't afford to keep hand-optimizing code as applications grew in complexity, so they trusted and helped improve compiler technology.

This is where we are with AI testing; the same tenets hold true.

- The rapid advancement of large language models and code understanding has led to AI-generated tests that catch edge cases that human developers miss. Just as compilers outperformed hand-written assembly, AI testing is beginning to surpass manual test writing in both coverage and effectiveness.

- Modern AI systems can build sophisticated internal representations of codebases, understanding the syntax and semantic relationships between components, user behaviors, and system dependencies.

- The emergence of standardized AI testing frameworks (cough-cough, Momentic) and proven AI coding tools (like Cursor, Claude, and similar) is creating a foundation of reliability that will only strengthen as these systems mature and become battle-tested in production environments.

- Economic pressures are already forcing this transition. Companies simply cannot afford to maintain comprehensive manual test suites and QA teams. The cost of missed bugs in production far outweighs any perceived benefits of hand-crafted tests.

We are not at the sword fight stage yet. But it is coming. And it will be good.

Why You Want Black Box Testing

Garry Tan wants black box testing.

You should too. Testing sucks; that isn't a secret. It is time- and resource-intensive. Manual test writing and maintenance consume a lot of time, especially for large codebases, and QA teams or developers spend hours writing and rewriting test cases for every change. It just isn't an efficient system.

Test coverage is also often incomplete. Even with dedicated testing teams, it's easy to miss edge cases, and changes in requirements or code can leave tests outdated. Traditional testing might only happen at certain stages (e.g., nightly builds, staging environments), and, to Garry's point, bug detection can be slow, and bug fixing is even slower.

But AI testing changes everything. It offers near-infinite scalability. As your application grows in complexity, traditional testing approaches break down: more features mean exponentially more test cases to write and maintain. AI-based testing systems scale automatically with your codebase. The AI agent simply adapts and generates new test scenarios as your application evolves, maintaining comprehensive coverage without additional effort from your team.

This continuous nature of AI testing is a game-changer:

- Unlike human testers who need breaks and can only work in defined shifts, AI testing systems operate 24/7, constantly iterating and improving their test coverage. Every code change becomes an opportunity for immediate testing and validation rather than waiting for the next test cycle.

- This continuous operation leads to dramatically faster bug detection. Instead of discovering issues during scheduled testing phases or, worse, from user reports, potential defects can be flagged immediately as they're introduced. The system can catch, in real time, subtle regressions and edge cases that might slip past human testers.

Perhaps most importantly, AI testing transforms how we allocate our human resources. Instead of having skilled developers and QA engineers spend countless hours on repetitive regression testing, they can focus on security audits, exploratory testing, and complex edge cases that require human insight. These valuable tasks become their primary focus, while the AI handles the grunt work of comprehensive testing.

But why a "black box"?

But that is all just about AI testing in general. Why do we think the "black box" will prevail?

This goes back to our original point: you just don't need to know any of the internals. Developers shouldn't have to think about the details of coverage or test generation. They should write code and rely on the black box to do comprehensive testing. And just like with a compiler, you care about the output (does the code compile? Do tests pass?), not about the intricacies of lexical analysis, parsing, optimization, etc. Just as we trust our IDEs to handle syntax highlighting and code completion without understanding their internals, we should trust our testing systems to handle coverage and validation autonomously.

It is about cognitive load. Developers are simple folk; they need that headspace for other things.

(source: xkcd 2510)

How The Black Box Will Work

We don't know, that's the magic!

However, we can sketch out how such a system would operate. At its core, the black box testing system would have several key components that work in harmony.

Monitoring

An AI constantly observes user sessions, identifying which paths through the code are most frequently traveled. It collects data on feature usage patterns and logs any anomalies or unexpected behaviors. This creates a dataset of real-world usage that goes beyond what traditional test coverage metrics can capture.
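As a rough illustration of the monitoring step, here is a minimal sketch in Python. It assumes sessions have already been recorded as ordered lists of steps; the `Session` shape, the `summarize` helper, and the sample data are all hypothetical and are not part of any real product API.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Session:
    """One recorded user session (hypothetical shape, for illustration only)."""
    steps: Tuple[str, ...]        # ordered screens or actions the user went through
    error: Optional[str] = None   # set when something unexpected happened


def summarize(sessions: List[Session]):
    """Count how often each path was taken and collect anomalous sessions."""
    path_counts = Counter(s.steps for s in sessions)
    anomalies = [s for s in sessions if s.error is not None]
    return path_counts, anomalies


# Made-up sample data standing in for real telemetry.
sessions = [
    Session(("login", "cart", "checkout")),
    Session(("login", "cart", "checkout")),
    Session(("login", "search", "cart")),
    Session(("login", "settings"), error="500 on save"),
]

path_counts, anomalies = summarize(sessions)
for path, count in path_counts.most_common():
    print(count, " -> ".join(path))
for s in anomalies:
    print("anomaly:", " -> ".join(s.steps), "|", s.error)
```

The frequency table is the part that ordinary coverage metrics do not give you: it says which paths real users take, not just which lines a test happened to execute.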

Extrapolation and modeling

Using the collected data as a foundation, the AI doesn't just test what it sees; it infers what it might see. It identifies less-traveled or edge paths in the code and automatically generates test scenarios for them. This is where AI outpaces traditional testing approaches: it can imagine and test scenarios that haven't happened yet but could.
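To make "infers what it might see" a little more concrete, the sketch below swaps the AI for a plain graph walk: it stitches together transitions that users have actually made and lists the paths that are plausible but that no recorded session has taken. The `unseen_paths` function and the sample paths are hypothetical; a real system would presumably rely on a learned model rather than simple enumeration.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple

Path = Tuple[str, ...]


def unseen_paths(observed: Iterable[Path], max_len: int = 4) -> List[Path]:
    """List paths built only from observed transitions that no session has taken."""
    observed = list(observed)

    # Build a transition graph: step -> steps that were seen to follow it.
    graph = defaultdict(set)
    for path in observed:
        for a, b in zip(path, path[1:]):
            graph[a].add(b)

    # Every prefix of an observed path counts as already exercised.
    seen = {path[:i] for path in observed for i in range(1, len(path) + 1)}

    # Depth-first walk from each observed entry point.
    candidates = set()
    stack = [(path[0],) for path in observed if path]
    while stack:
        path = stack.pop()
        if path not in seen:
            candidates.add(path)
        if len(path) < max_len:
            for nxt in graph.get(path[-1], ()):
                if nxt not in path:  # skip cycles to keep the walk finite
                    stack.append(path + (nxt,))
    return sorted(candidates)


observed = [
    ("login", "cart", "checkout"),
    ("login", "search", "cart"),
]
print(unseen_paths(observed))
# → [('login', 'search', 'cart', 'checkout')]
```

In this toy data, nobody who arrived at the cart through search went on to check out, so that path becomes a candidate test scenario even though it never appeared in a session.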

Execution and reporting

It runs these generated tests in staging or development environments, carefully flagging any failing scenarios. The AI can go beyond simple pass/fail reporting. It can analyze failures and propose suggestions for fixing them, understanding the context of the failure and potential solutions based on patterns it has learned.
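The execution half of this step can be sketched as a small runner that executes each scenario and records failures together with their context. Everything here is an assumption made for illustration: `run_scenarios`, the `Result` shape, and `fake_app`, which stands in for a staging environment. Failure analysis and fix suggestions are deliberately left out.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Tuple

Scenario = Tuple[str, ...]


@dataclass
class Result:
    scenario: Scenario
    passed: bool
    detail: Optional[str] = None  # failure message, kept as context for later analysis


def run_scenarios(
    scenarios: Iterable[Scenario],
    execute: Callable[[Scenario], None],
) -> List[Result]:
    """Run every scenario; a raised exception marks that scenario as failed."""
    results = []
    for scenario in scenarios:
        try:
            execute(scenario)
            results.append(Result(scenario, True))
        except Exception as exc:
            results.append(Result(scenario, False, f"{type(exc).__name__}: {exc}"))
    return results


def fake_app(scenario: Scenario) -> None:
    """Hypothetical stand-in for staging: checkout breaks when reached via search."""
    if "search" in scenario and scenario[-1] == "checkout":
        raise RuntimeError("cart empty at checkout")


results = run_scenarios(
    [("login", "cart", "checkout"), ("login", "search", "cart", "checkout")],
    fake_app,
)
failures = [r for r in results if not r.passed]
for f in failures:
    print("FAILED", " -> ".join(f.scenario), "|", f.detail)
```

Keeping the scenario and the error message together in each `Result` is what would let a later stage reason about why something failed instead of only reporting that it did.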

CI/CD pipeline

When the AI identifies a new path through the code or discovers a potential bug, it automatically tests, logs, and proposes solutions. This process does not need human orchestration. It just happens, much like how your compiler works without you needing to think about its internals.

Beyond the Black Box

Imagine that your testing process is as straightforward as running npm run test or clicking a "build & test" button. Under the hood, an AI orchestrates thousands of test scenarios.

When code changes break existing assumptions or introduce new behaviors, the AI adjusts or regenerates tests automatically. In the (not too distant) future, you might see automated PRs with suggested patches, plus an entire pipeline that merges these fixes if they pass further checks. This closes the loop between "find a bug" and "fix the bug" with minimal human intervention.

This is what we're working on at Momentic. If you want to black-box your testing, reach out here. If you want to build the black box, we're hiring (swords provided).