Fetch Mocking With Playwright in Next.js 15

A deep dive into how to intercept and mock API responses using Playwright and Next.js

Let's say you're doing what everyone is doing right now (including us) and building AI into your application.

You are creating a beautiful UI, replete with loading states, streaming responses, and error handling. But there's a catch: every time you want to test your changes, you have to make actual API calls that are slow, costly, and unpredictable. Your development flow grinds to a halt as you wait for responses and deal with the flakiness of the API. Every call costs money, so your testing budget starts to look concerning.

This is why fetch mocking exists. Fetch mocking allows you to intercept and simulate API responses during development and testing, letting you work faster and more reliably. And now, an experimental feature in Next.js 15 is making this process easier using Playwright and MSW.

The What & Why of Fetch Mocking

Fetch mocking intercepts network requests made by your code and returns predetermined responses instead of making actual HTTP calls. When you mock the fetch API, you create a controlled environment where network requests behave exactly as expected.

At its heart, fetch mocking works by intercepting outgoing HTTP requests before they leave your application. This interception happens at the network level, allowing you to capture requests before they're sent, inspect request details (such as headers, body, and method), and then decide how to respond.

Once a request is intercepted, you can return any response you want. This includes:

- Success scenarios with specific data

- Error cases (400s, 500s)

- Network failures

- Delayed responses to test loading states
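The flow described above (capture the request, inspect it, decide how to respond) can be sketched without any library. This is only an illustration of the idea, not how MSW or Next.js implement it: `MockOutcome`, `MockRoute`, and `resolveMock` are hypothetical names, and the `api.example.com` URLs are placeholders.

```typescript
// Hypothetical sketch of the decision a mocking layer makes per request.
// These names are illustrative and do not come from MSW, Playwright, or Next.js.
type MockOutcome =
  | { kind: "response"; status: number; body: unknown; delayMs: number }
  | { kind: "network-error" }
  | { kind: "passthrough" };

interface MockRoute {
  method: string; // upper-case HTTP method, e.g. "GET"
  url: string; // exact URL to match
  outcome: MockOutcome;
}

// Return the outcome of the first route matching method + URL.
// Requests that match nothing pass through to the real network.
function resolveMock(routes: MockRoute[], method: string, url: string): MockOutcome {
  for (const route of routes) {
    if (route.method === method.toUpperCase() && route.url === url) {
      return route.outcome;
    }
  }
  return { kind: "passthrough" };
}

const routes: MockRoute[] = [
  // Success scenario with specific data
  {
    method: "GET",
    url: "https://api.example.com/haiku",
    outcome: { kind: "response", status: 200, body: { lines: 3 }, delayMs: 0 },
  },
  // Error case
  {
    method: "POST",
    url: "https://api.example.com/haiku",
    outcome: { kind: "response", status: 500, body: { error: "boom" }, delayMs: 0 },
  },
  // Network failure
  {
    method: "GET",
    url: "https://api.example.com/offline",
    outcome: { kind: "network-error" },
  },
  // Delayed response, useful for exercising loading states
  {
    method: "GET",
    url: "https://api.example.com/slow",
    outcome: { kind: "response", status: 200, body: { lines: 3 }, delayMs: 2000 },
  },
];
```

A real mocking library adds pattern matching, request inspection, and the actual transport hooks on top of this, but the core decision is the same: match the request, then respond, fail, or let it through.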

Why mock fetch calls? The first reason is predictability. Real APIs can be unpredictable. Data can be different each time (even more so with AI APIs, which are non-deterministic by nature). You can easily hit rate limits or quotas, and that's before you factor in network issues.

The point of testing is to be predictable so you can be sure what you are testing works as intended. Mocking ensures your tests run against consistent, controlled data.

Testing with actual API calls is also slow. Not glacial, but slow enough that tests can time out. Again, you're left with the problem of not knowing whether the timeout was due to the API or the UI.

Finally, for paid APIs like OpenAI, each test run costs money. Integration tests might make hundreds of calls, so development and debugging can become expensive. Have you ever sat there refreshing the usage page in your API dashboard, hoping that you aren't blowing through your Ramp budget? Then fetch mocking is for you.

Adding MSW & Playwright into the mix

Unlike traditional mocking libraries that replace the fetch function at the application level, Mock Service Worker (MSW) intercepts requests at the network level through a service worker. A service worker acts as a proxy between your application and the network, allowing MSW to intercept and handle requests in a way that more closely mirrors real-world API interactions. This makes your tests more reliable and your mocks more realistic, as they operate at the same level as network requests.

Because MSW operates at the network level, the same mocks can be used across your entire testing pipeline. This "write once, use everywhere" approach means less code duplication and more consistent testing across your application. MSW's architecture also aligns particularly well with Next.js 15's server-side features and testing philosophy:

- Supports streaming

- Works with server-side rendering

- Handles both API routes and external requests

- Integrates naturally with Playwright for E2E testing

It is the server-side rendering we're most interested in today. If you call AI APIs, you typically do it on the server and then pass the data (or rendered page) to the client. Playwright has become the go-to choice for E2E testing Next.js applications, but it hasn't had an easy answer for mocking requests made on the server. To fill that gap, the Next.js team has been working on an experimental test mode for fetch mocking with Playwright.

Let's see how that works.

Using Experimental Playwright Fetch Mocking With Next.js 15

OK, you are making a call to the OpenAI API within a component like this:

    // Server component: this fetch runs on the server while the page renders
    export default async function ChatPage() {
      const response = await fetch("https://api.openai.com/v1/chat/completions", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
        },
        body: JSON.stringify({
          model: "gpt-4o",
          messages: [{ role: "user", content: "write a haiku about ai" }],
        }),
      });
      const data = await response.json();

      return (
        <>
          <h1 className="mb-16 mt-32 text-center text-6xl font-bold">hAIku</h1>
          <p className="mx-auto max-w-xl whitespace-pre-line text-center text-4xl italic leading-relaxed text-rose-600">
            {data.choices[0].message.content}
          </p>
        </>
      );
    }

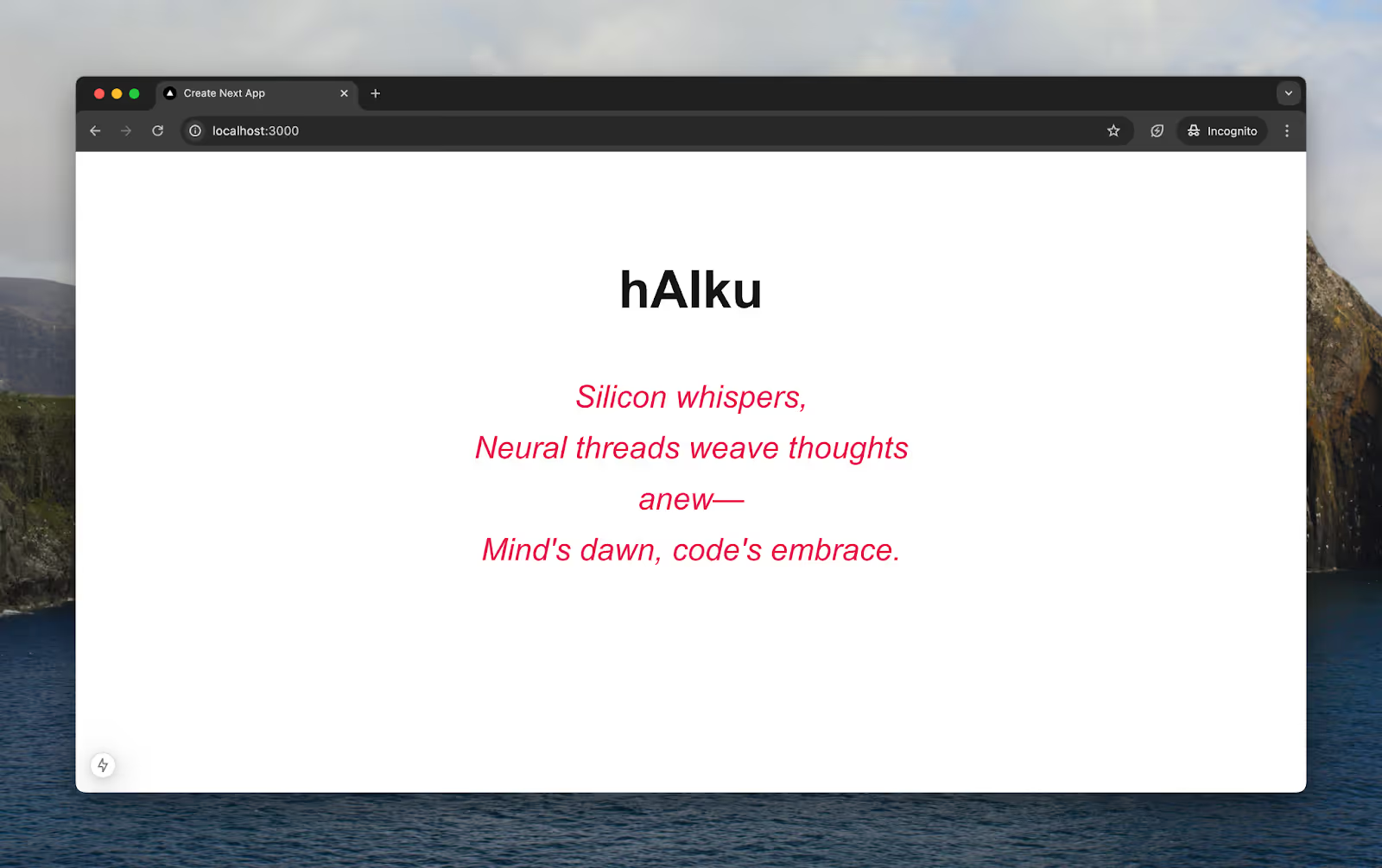

We get:

Every time we tweak the UI here, we call the OpenAI API anew. Cents down the drain just to try different shades of red. Time for some fetch mocking.

If we had "use client" at the top, we would have an easier way to mock this data. For client-side rendering, we could use Playwright's built-in page.route(). But as we're on the server side, we must proxy our requests. This is where the new experimental fetch mocking and MSW come in.
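For comparison, a client-side mock with `page.route()` might look roughly like the sketch below. To keep it self-contained, `RouteLike` is a hypothetical structural stand-in for the `route` object Playwright passes to a route handler (only its `fulfill` method is modeled), and `chatCompletion` and `mockHaiku` are illustrative helper names.

```typescript
// Hypothetical stand-in for the part of Playwright's Route object used here.
interface RouteLike {
  fulfill(options: { status?: number; contentType?: string; body?: string }): Promise<void>;
}

const OPENAI_URL = "https://api.openai.com/v1/chat/completions";

// Build a payload containing the one field the component reads:
// choices[0].message.content
function chatCompletion(content: string) {
  return { choices: [{ message: { role: "assistant", content } }] };
}

// Route handler: answer with a canned haiku instead of hitting the network.
function mockHaiku(route: RouteLike): Promise<void> {
  return route.fulfill({
    status: 200,
    contentType: "application/json",
    body: JSON.stringify(
      chatCompletion("Silicon dreams flow\nNeural networks pulse with life\nFuture awakens"),
    ),
  });
}

// In a Playwright test this would be registered before navigating:
//   await page.route(OPENAI_URL, mockHaiku);
//   await page.goto("http://localhost:3000/");
```

The catch is that `page.route()` only sees requests made by the browser. Our component fetches on the server, so the real API would still be called during rendering.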

First, let's add this to our next.config.ts:

    // next.config.ts
    import type { NextConfig } from "next";

    const nextConfig: NextConfig = {
      experimental: {
        testProxy: true,
      },
    };

    export default nextConfig;

This tells Next to use the experimental testProxy. Next, let's install our testing dependencies:

    npm install -D @playwright/test msw

Before we add our tests, we also need to create a playwright.config.ts file to run our tests:

    // playwright.config.ts
    import { defineConfig } from "next/experimental/testmode/playwright";

    export default defineConfig({
      // Other config
      testDir: "./tests",
      // Let Playwright start the Next.js project before running the tests
      // See https://playwright.dev/docs/test-webserver
      webServer: {
        command: "npm run dev",
        url: "http://localhost:3000",
      },
    });

Here, we're configuring Playwright to automatically start our Next.js development server before running tests. This ensures our application is running and accessible at localhost:3000 when our tests begin.

Now we'll write our test in tests/haiku.spec.ts:

    // tests/haiku.spec.ts
    import {
      HttpResponse,
      expect,
      http,
      passthrough,
      test,
    } from "next/experimental/testmode/playwright/msw";

    test.use({
      mswHandlers: [
        [
          http.post("https://api.openai.com/v1/chat/completions", () => {
            return HttpResponse.json({
              choices: [
                {
                  message: {
                    content:
                      "Silicon dreams flow\nNeural networks pulse with life\nFuture awakens",
                  },
                },
              ],
            });
          }),
          // allow all non-mocked routes to pass through
          http.all("*", () => passthrough()),
        ],
        { scope: "test" },
      ],
    });

    test("displays an AI generated haiku", async ({ page }) => {
      await page.goto("http://localhost:3000/");

      await expect(
        page.getByText(
          "Silicon dreams flow\nNeural networks pulse with life\nFuture awakens",
        ),
      ).toBeVisible();
    });

In this test, we use MSW to intercept the OpenAI API call and return a predetermined haiku instead. The test then verifies that our component correctly displays this mocked response. We're using the scope: "test" setting to ensure these mocks are isolated to this specific test, preventing interference with other tests. This also uses a passthrough so any other API calls continue to their proper endpoints.

With that done, we can run our test:

    npm exec playwright test --ui

Ideally, this should pass:

    Running 1 test using 1 worker

      ✓ 1 haiku.spec.ts:31:5 displays an AI generated haiku (594ms)

      1 passed (6.2s)

We've successfully proxied our API call to a mock. We can now start building out our application safe in the knowledge we're not accruing crazy OpenAI bills or worrying about our rate limits.

What would be next?

- Adding tests for error states: what happens when the API returns a 429 rate limit or a 500 error?

- Testing loading states by adding artificial delays to our mock responses

- Building out a more interactive UI where users can input their prompts and testing the full interaction flow

- Adding tests for different response formats and edge cases
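As a possible starting point for the first two items, the error and delay cases could be described as plain data before being wired into handlers. The sketch below is library-free: `Scenario`, `scenarios`, `getScenario`, and `expectsErrorState` are hypothetical names, the 3000 ms delay is an arbitrary choice, and the error bodies are illustrative placeholders rather than OpenAI's exact error format.

```typescript
// Hypothetical, library-free description of mock scenarios.
// The error bodies are illustrative placeholders, not OpenAI's exact format.
interface Scenario {
  status: number;
  body: unknown;
  delayMs: number; // artificial latency for exercising loading states
}

const haiku = "Silicon dreams flow\nNeural networks pulse with life\nFuture awakens";

const scenarios: Record<string, Scenario> = {
  success: { status: 200, body: { choices: [{ message: { content: haiku } }] }, delayMs: 0 },
  slow: { status: 200, body: { choices: [{ message: { content: haiku } }] }, delayMs: 3000 },
  rateLimited: { status: 429, body: { error: { message: "Rate limit exceeded" } }, delayMs: 0 },
  serverError: { status: 500, body: { error: { message: "Internal server error" } }, delayMs: 0 },
};

// Look up a scenario by name, failing loudly on typos.
function getScenario(name: string): Scenario {
  const scenario = scenarios[name];
  if (!scenario) {
    throw new Error(`Unknown mock scenario: ${name}`);
  }
  return scenario;
}

// True when the UI under test should render its error state.
function expectsErrorState(scenario: Scenario): boolean {
  return scenario.status >= 400;
}
```

Inside an MSW handler, a scenario could then be applied with `await delay(scenario.delayMs)` followed by `return HttpResponse.json(scenario.body, { status: scenario.status })`, assuming MSW 2.x's `delay` and `HttpResponse` exports.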

This walkthrough shows how you can build accurate E2E testing into your application. With Next.js 15's experimental test proxy, MSW, and Playwright working together, we can create comprehensive test coverage that includes client- and server-side rendering scenarios. This approach saves on API costs during development and provides a more reliable and faster testing environment that closely mirrors production behavior.