Embracing AI is the only way to make E2E tests work

The way teams implement E2E tests is fundamentally broken. How can we fix it? By embracing AI.

A few weeks ago, you likely saw this post:

This sucks: a major product and engineering team is doing away with tests. But let's be clear: DHH, Gergely Orosz, and Kitson Kelly are right.

The way teams implement system tests is fundamentally broken. In most cases, the juice isn't worth the squeeze. But at Momentic, we see a different fix. Instead of throwing away the juice, let's make squeezing a whole lot easier.

The profit and pain of E2E testing

The workload associated with E2E tests now outweighs the value you get from them. The tests aren't worthless; the workload is simply the dominant term.

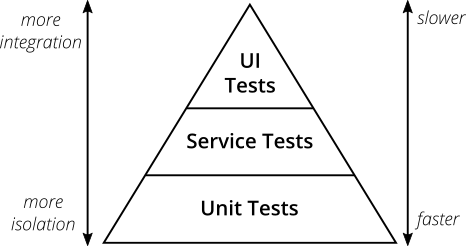

The value in E2E tests is ensuring your application works as expected from the user's perspective. They validate the entire flow of your application, from user interactions to back-end processing and data persistence. In the testing pyramid, they are the UI tests at the top.

Without the safety net of comprehensive end-to-end testing, teams risk deploying changes that introduce unexpected bugs or break critical user flows, hurting customer satisfaction. Unit and integration tests provide valuable coverage at the component level, but they can't fully replicate the complex interactions and dependencies that exist in production. That leaves the system vulnerable to high-impact failures that may go undetected until end users report them.

The pain, however, is significant. E2E tests are notoriously flaky, slow, and difficult to maintain. As your application grows in complexity, the number of test scenarios and edge cases explodes, leading to a massive test suite that takes hours to run and costs significant engineering time to create, maintain, and run.

This lack of maintainability is the problem teams keep running into. Teams get sucked into developing tests instead of developing product. The resulting slow feedback loop hampers development velocity and makes it harder to deliver new features and bug fixes. Even minor modifications can break tests, requiring constant updates. This maintenance burden can quickly become overwhelming, especially for teams with limited resources.

So we have two options.

On one side, we have the DHH route of throwing E2E tests into the sea. The core argument is simple: going overboard with system testing ends up being a net negative. The maintenance burden and slow feedback loop of a massive, flaky test suite outweigh the benefits, hurting overall development velocity and agility.

On the other, we find a better way to test.

Enhancing & scaling test automation with AI

Here's where we agree: today, the maintenance burden and slow feedback loop of a massive, flaky test suite outweigh the benefits.

Where we differ is the answer. Instead of throwing out tests, we need to make them easier and faster to build, maintain, and run. The barrier to entry for building and maintaining tests has to come down.

So, tooling has to get better. And that tooling has to include AI.

Today, the most significant time sinks are writing test code, maintaining the tests, and running them manually. AI can take over each of those steps:

- AI can automatically generate test cases based on the application's user interface and expected behaviors, saving countless hours of manual test writing. This intelligent test generation helps ensure that edge cases and complex scenarios are adequately covered, reducing the burden on QA teams.

- AI can assist with exploratory testing by learning from user interactions and suggesting areas of the application that may require more attention. This targeted approach helps QA teams prioritize manual testing efforts, ensuring that critical paths and high-risk areas are thoroughly tested.

- AI-driven tools can enable auto-healing of broken tests, adapting to changes in the application's UI or flow without requiring manual intervention. This self-healing capability significantly reduces the maintenance burden associated with E2E tests, allowing teams to focus on delivering value rather than constantly updating test scripts.

You're writing less code for better effect. This lets you shrink your QA team to a single QA engineer, or fold QA into the development cycle for application engineers. Engineers get far more coverage, and when you're not spending all your time writing and fixing tests, you can focus on building cool stuff for your users: shipping features faster, making the app better, all that good stuff.

This is a better option than throwing away testing. We should embrace AI's ability to work fast and scale, leading to better testing and more efficient development.

Make E2E tests matter

The value of E2E testing is "ensuring your application works as expected from the user's perspective."

But a headless browser isn't a user. We use E2E tests alongside unit and integration tests to ensure everything works as expected. But a product is more than that. It has to feel right. How do you test for that?

With AI, we are entering an era of testing beyond functional correctness. AI-powered testing tools can help us evaluate our applications' user experience in ways that were previously impossible.

Imagine an AI that can interact with your application like a real user, providing feedback not just on whether a feature works but also on how it feels to use. This AI could identify points of frustration, confusion, or delight in the user journey, helping designers and developers refine the UI and create a more engaging experience.

This AI could read user stories, customer service tickets, or PRDs to understand what the UI is supposed to feel like, then spot where the design, functionality, and feel fall short. It could then report those gaps back to the engineering team in natural language, so they understand exactly where the experience is off.

This is the future of testing: not just checking boxes but genuinely understanding and optimizing the user experience. With AI, we can create applications that don't just work but feel right. We can test for the intangible quality that makes a product delightful to use, and do it faster and more efficiently than ever before.

System tests have succeeded

We don't think it's right to say, "System tests have failed." System testing tools have failed. Or, rather, they are nascent and not yet up to the job we're asking of them.

If we throw out all testing now, we'll produce worse products. That's why we're building better tools at Momentic: AI-powered tests that lift the burden of testing, the real problem, off your team and place it on the shoulders of AI. An AI that can write your tests, test your pages, do it at scale, and give you the feedback you need to make meaningful decisions about your product.